Pull data from any source – databases, APIs, files, you name it.

Move that data quickly into any format with automatic schema migration. No more integration headaches.

The system intelligence that turns complex data workflows into seamless automations.

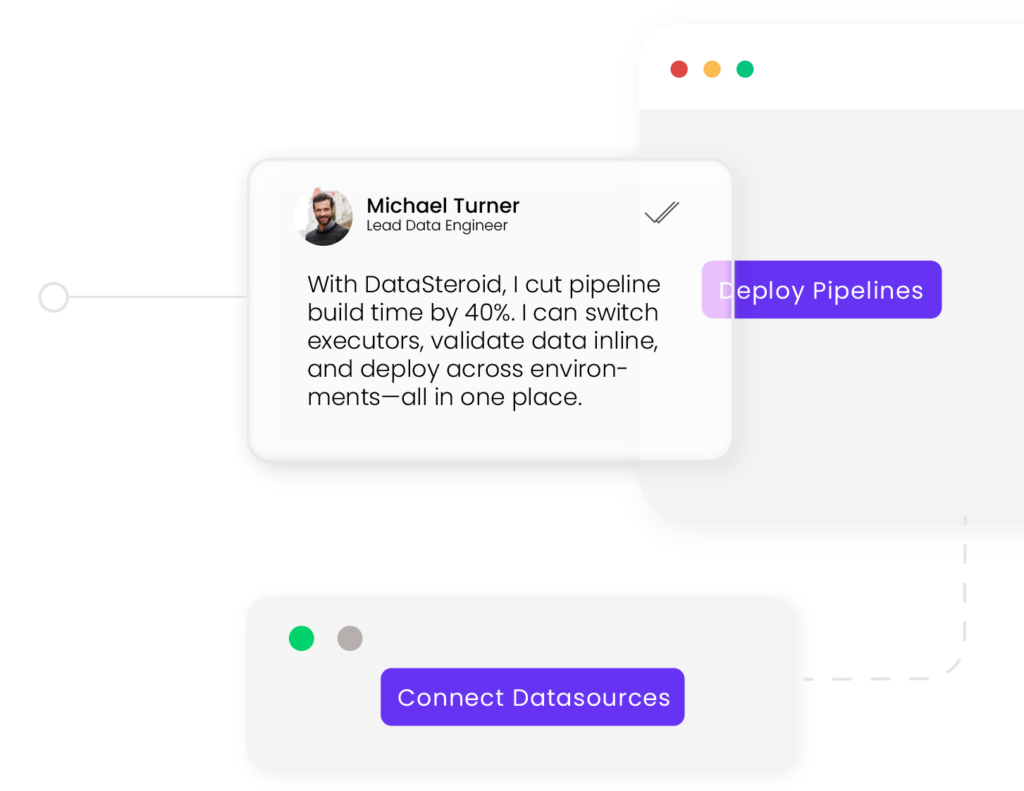

Clean, merge, and reshape your data through an intuitive visual interface.

Leverage our drag-and-drop functionality to build data pipelines without scripting and automatically transform data to your desired format. What used to take weeks now takes minutes.

Automated testing, error handling, and monitoring keep your pipelines running smoothly.

Our automated data lineage tracking helps you maintain complete visibility of your pipelines, while our pre-built governance presets make compliance easy. Sleep better at night.

Align and verify data across CRM, ERP, and warehouse systems using configurable visual blocks—no custom scripting needed.

Prepare, transform, and export time-series data to your ML workflows or BI tools—without engineering overhead.

Ingest and transform product and user data to feed into your recommendation models or APIs.

Unify siloed systems into a consistent dataset with version control, governance, and audit trails built in.

Set up pipelines ready for anomaly detection, with data streaming, quality checks, and high-throughput executors.